Product Update

Generalist Medical AI and How To Choose The Right AI Model

- By HealthMatriX Team

05 Mar

This is the second blog post for HealthMatriX’s AI Learning and Awareness series.

The advancement in highly adaptable and reusable AI models holds the promise of groundbreaking improvements in the field of medicine. Models built to solve a broad range of common medical problems are known as Generalist Medical AI, or GMAI. GMAI models can perform a diverse spectrum of medical tasks with minimal or no dependence on task-specific labeled data.

GMAI models, built through complex and diverse data sets, will exhibit a remarkable ability to interpret diverse combinations of medical modalities, encompassing data from imaging, electronic health records, laboratory results, genomics, graphs, and medical text. These models, in turn, will yield expressive outputs, ranging from free-text explanations and spoken recommendations to image annotations, showcasing their advanced medical reasoning abilities.

Like any other field, healthcare is being revolutionized by AI. Decision-makers and business owners in the healthcare and life science sector now recognize the importance of using AI in their business. But this recognition comes at a cost: the surge in new AI models can be daunting and overwhelming, causing decision paralysis among corporate leaders.

This article provides a streamlined framework designed to assist health sector professionals in understanding and choosing the best AI models for their businesses. We will guide you on the basics of AI models and how foundation models for GMAI will redefine the healthcare and life science sector.

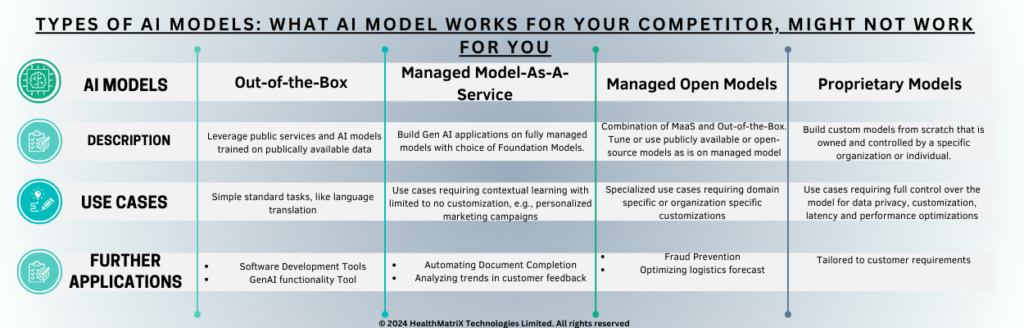

Types of AI Models

An AI model is a mathematical model that applies one or more algorithms to data to recognize patterns, make predictions, or make decisions without human intervention. There are four types of AI models:

1. Out-of-the-Box AI Model

An “out-of-the-box” AI model refers to a pre-trained or pre-configured artificial intelligence model ready for use without requiring extensive customization or additional training. These models are designed to offer general-purpose functionality and can perform general tasks without the need for fine-tuning on user-specific data. They come with pre-learned features and parameters, making them accessible and usable with minimal effort or expertise.

Out-of-the-box Models Function At The Expense of Your Business:

These models are often trained on large and diverse datasets, allowing them to exhibit a degree of versatility in handling various tasks within a particular domain. But out-of-the-box models are not profitable for your business in the long run. Because they are trained on other organizations’ data, not your own, they cannot capture the workflows specific to your healthcare or life science business, and they cannot generate analyses tailored to it.

2. Managed Model-As-A-Service

“Managed Model as a Service” refers to a cloud-based solution that streamlines the deployment and management of machine learning models. This service reduces the complexities associated with infrastructure management in the machine learning lifecycle. With a managed model as a service, users can deploy, host, and maintain machine learning models without delving into the complexities of underlying infrastructure, server management, or scaling concerns.

These services provide a fully managed environment, enabling users to focus on model development and training. Key features often include version control, A/B testing, monitoring tools, and seamless integration with other cloud services. By abstracting infrastructure complexities, managed model-as-a-service offerings empower organizations to efficiently deploy and manage machine learning models in production, enhancing scalability and easing the overall operational burden.

3. Managed Open Models

A managed open model is a publicly available, open-source model that is fine-tuned for use in your business. It suits use cases that require domain-specific or organization-specific customization of an open-source model.

4. Proprietary Model

A proprietary AI model refers to an artificial intelligence model that is owned and controlled by a specific organization or individual. Unlike open-source models, which are publicly accessible and can be freely modified, a proprietary AI model is characterized by its closed nature, with the underlying algorithms, architecture, and parameters being proprietary and strictly not disclosed to the public.

Proprietary AI models are often developed by companies as part of their intellectual property and competitive advantage. The organizations that create these models retain exclusive rights to their use, modification, and distribution. Users or clients may have access to the functionalities of the model through APIs or services provided by the owning organization, but they do not have visibility or control over the internal workings of the model.

A proprietary AI model built for your organization can be the best fit for your business because it is trained on your specific data. Still, you should choose your AI solution provider carefully, because you will depend on that provider for all of your updates and support needs.

HealthMatriX Technologies is one of the best AI solution providers for proprietary models because we know the risks AI misuse poses, and we are equipped with advanced technology and cyber-secure, data-protected solutions tailored for your healthcare or life science business. We have several protocols and procedures in place for handling confidential and mission-critical data for organizations in the healthcare and life science sector.

Foundation Models For GMAI

A foundation model refers to a deep-learning neural network that undergoes training on extensive datasets. Instead of creating artificial intelligence (AI) from the ground up, data scientists employ a foundation model as a preliminary framework. This approach enables the development of machine learning (ML) models, serving as an efficient and cost-effective starting point for the swift generation of new applications.

Foundation models represent a stark change from the previous generation of AI models designed for specific tasks one at a time. Growth in datasets, increased model size, and advancements in model architectures contribute to the previously unseen abilities of foundation models. Many recent foundation models can handle combinations of different data modalities, enabling them to process and output diverse types of information.

Due to the abundance of foundational models accessible, AI engineers can help healthcare providers and medical equipment suppliers with rapid prototyping services. This advancement allows the delivery of AI prototypes within weeks, a significantly shorter timeframe compared to just one or two years ago, and at a fraction of the cost.

To get rapid prototyping services, you need to ensure that the service provider you choose has the expertise, capacity, innovation, and flexibility to develop prototypes. HealthMatriX provides rapid AI prototyping services and our state-of-the-art, innovative, and cybersecure prototyping service provides data privacy protection and scalability like no other. Contact HealthMatriX now to avail an AI prototyping service for your healthcare business.

Despite early efforts, the shift towards foundation models has not widely expanded in medical AI due to challenges such as limited access to large, diverse medical datasets, the complexity of the medical domain, and the recency of this development. Current medical AI models are often developed with a task-specific approach, limiting their flexibility.

A specific example is a chest X-ray interpretation model trained on a dataset explicitly labeled for pneumonia. Such a task-specific model can only detect pneumonia on chest X-rays, which illustrates the limitation of narrow models. Current task-specific models in medical AI typically cannot adapt to other tasks or different data distributions without retraining on another dataset.

Most of the more than 500 AI models approved by the Food and Drug Administration for clinical medicine are approved for only one or two narrow tasks, which underlines the prevalence of task-specific models in medical applications. However, recent advancements in foundation models have brought about a new concept, the generalist medical AI model: a class of advanced medical foundation models that will be widely used across medical applications, largely replacing task-specific models.

Generalist Medical AI (GMAI) models, inspired by foundation models outside medicine, possess three key capabilities that distinguish them from conventional medical AI models.

- GMAI models can easily adapt to new tasks by having them described in plain language, enabling dynamic task specification without the need for retraining.

- GMAI models exhibit flexibility in accepting different combinations of data modalities as inputs and producing corresponding outputs, contrasting with the constraints of more rigid multimodal models.

- GMAI models formally represent medical knowledge, enabling them to reason through novel tasks and articulate outputs using medically accurate language.

Applications of GMAI

Below are the six applications of GMAI:

1. Generation of Radiology Reports

GMAI can introduce advanced digital radiology assistants that will significantly reduce radiologists’ workloads. These models can automatically draft comprehensive radiology reports, incorporating abnormalities, normal findings, and patient history. Interactive visualizations, including dynamic highlighting of regions described in reports, enhance collaboration with clinicians.

2. Augmented Procedures

GMAI is envisioned as a surgical assistant capable of annotating real-time video streams during procedures. It can provide spoken alerts for skipped steps, read relevant literature, and even assist with endoscopic procedures. GMAI’s integration of vision, language, and audio modalities, along with its medical reasoning abilities, allows it to handle diverse clinical scenarios.

3. Interactive Note-Taking

GMAI streamlines clinical workflows by drafting electronic notes and discharge reports. By monitoring patient information and clinician-patient conversations, GMAI minimizes administrative overhead. This application involves accurately interpreting speech signals, contextualizing data from Electronic Health Records, and generating free-text notes, ultimately saving clinicians time.

4. Chatbots for Patients

GMAI powers patient support apps, offering personalized care outside clinical settings. It interprets diverse patient data, ranging from symptoms to glucose monitor readings, and provides detailed advice in clear, accessible language. GMAI’s capability to communicate with non-technical audiences and handle noisy, diverse patient-collected data makes it a valuable tool for patient-facing chatbots.

5. Bedside Decision Support

GMAI expands on existing AI-based early warning systems by offering detailed explanations and care recommendations. This application involves parsing electronic health record (EHR) sources, summarizing patient states, projecting future conditions, and recommending treatment decisions. GMAI leverages clinical knowledge graphs and text sources to provide comprehensive decision support at the bedside.

6. Text-to-Protein Generation

GMAI models can generate protein amino acid sequences and three-dimensional structures from textual prompts. This application involves designing proteins with desired functional properties. GMAI’s in-context learning allows it to dynamically define new generation tasks based on a handful of example instructions, offering flexibility in protein design interfaces.

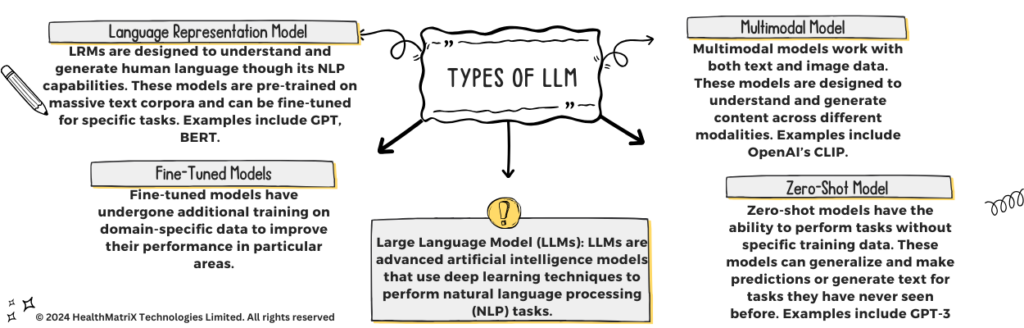

Choosing The Right AI Model For Your Business:

Given the many variants of AI models currently available, it is not practical to address each one comprehensively. For the convenience of our readers, we use large language models (LLMs) as an illustrative example of how to select an AI model. The methodology for selecting other kinds of AI models closely follows the process for choosing an LLM.

1. Define What You Need

To maximize AI’s positive impact on your business, start by pinpointing the exact issue you want to tackle. Whether it’s boosting business intelligence with an AI chatbot, hastening digital transformation via AI-generated code, or refining enterprise search with a multilingual embedding model, a clear understanding of your needs is key. Once you’ve got that clarity, sketch out a strategy integrating AI solutions tailored to your requirements.

Identify one or more focused, task-oriented use cases for your problem. Keep in mind that each use case might call for different types of LLMs to achieve your desired outcomes.

For specific use cases where complex reasoning isn’t essential, opting for smaller models – perhaps with slight adjustments through fine-tuning – is favored. This choice is driven by factors such as reduced latency and cost efficiency. Conversely, in scenarios demanding complex reasoning across diverse topics, a larger model, enhanced by dynamically retrieved information, may deliver superior accuracy, justifying the higher associated costs.
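The rule of thumb above can be written down as a small helper. This is only a sketch of the reasoning in the previous paragraph: the `UseCase` class, the `recommend_model` function, and the two example use cases are hypothetical names introduced for illustration, not part of any product or library.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """A focused, task-oriented use case (hypothetical structure)."""
    name: str
    needs_complex_reasoning: bool  # complex reasoning across diverse topics?


def recommend_model(use_case: UseCase) -> str:
    """Return a coarse recommendation that mirrors the rule of thumb in the text."""
    if use_case.needs_complex_reasoning:
        # Higher accuracy is expected to justify the higher cost.
        return "larger model with dynamically retrieved information"
    # Lower latency and lower cost are the deciding factors.
    return "smaller model, optionally fine-tuned"


# Hypothetical examples; how a real use case is classified is a judgment call.
use_cases = [
    UseCase("appointment reminder drafting", needs_complex_reasoning=False),
    UseCase("clinical question answering across specialties", needs_complex_reasoning=True),
]

for uc in use_cases:
    print(f"{uc.name}: {recommend_model(uc)}")
```

In practice the decision is rarely binary, so treat the output as a starting point for discussion and not as a final answer.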

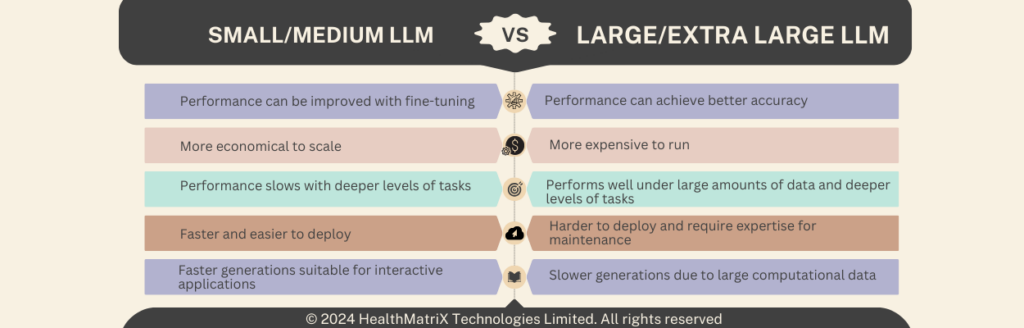

2. Choose The Size of the AI Model

The next step is to choose the size of the AI model that is suitable for your business needs. A common method for comparing model sizes is to examine the number of parameters they contain. Parameters are the internal variables, or weights, that a model learns during training and that determine how it maps inputs to outputs.

For instance, larger models with more parameters (>50 billion) are generally perceived as more powerful and more capable of tackling complex tasks. However, their increased power comes with the trade-off of requiring more computational resources for execution.

Although parameter count serves as a useful metric for model size, it doesn’t fully encapsulate the comprehensive dimensions of a model. Achieving a holistic view involves considering supporting architecture, training data characteristics (e.g., volume, variety, and quality), optimization techniques (e.g., quantization), transformer efficiencies, choice of learning frameworks, and model compression methods. The intricate variations in models and their creation processes complicate direct, apples-to-apples comparisons.
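To make the link between parameter count and computational resources concrete, here is a rough back-of-the-envelope sketch. It estimates only the memory needed to hold the model weights, using the standard storage sizes of common numeric formats; it ignores activations, caches, and serving overhead, so real requirements will be higher. The function name `weight_memory_gb` is our own.

```python
# Bytes used to store one parameter in common numeric formats.
BYTES_PER_PARAM = {
    "fp32": 4.0,  # 32-bit floating point
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# A 50-billion-parameter model at different precisions.
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(50e9, precision):.0f} GB")
# fp32: 200 GB
# fp16: 100 GB
# int8: 50 GB
# int4: 25 GB
```

This is why optimization techniques such as quantization matter: the same parameter count can translate into very different hardware needs.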

3. Review The Sourcing Options

Type and size aren’t the sole considerations in model comparisons. After identifying several AI models, businesses must decide how to build or source the Large Language Models (LLMs) that form the foundation of their applications. LLMs use statistical algorithms trained on extensive data volumes to comprehend, summarize, generate, and predict text-based language, with building and training a high-performing model often incurring costs in millions.

Businesses typically have three main options for LLM sourcing:

- Developing an LLM in-house from scratch, utilizing on-premises or cloud computing resources.

- Choosing pre-trained, open-source LLMs available in the market.

- Employing pre-trained, proprietary LLMs through various cloud services or platforms.

Many organizations lack the necessary expertise, funding, or specific needs to justify building an LLM from scratch, making the second and third options (pre-trained open-source or proprietary LLMs) more practical and efficient.

Comparing open-source and proprietary models can be challenging, especially when considering the required infrastructure for building a scalable AI application. While open-source tools may seem initially cost-effective, evaluating all criteria for implementation, launch, security, and support reveals a less convincing picture.

To understand the distinctions between open-source and proprietary models, we recommend assessing multiple criteria beyond upfront costs. These include time-to-solution, data origin, data security options, the level of support, and the frequency of updates to the models.

For our readers’ convenience, we have prepared a comparison table of open-source and proprietary models:

| Criterion | Open-Source Model | Proprietary Model |
| --- | --- | --- |
| Cost | Often free or low cost, but deployment costs are very high compared to proprietary models | It mostly requires a subscription or usage fee. The majority of proprietary AI solution providers offer free trials. |
| Support and Updates | Community-based support. Updates may be irregular. | Professional onboarding support and deployment engineers are available. Regular updates are provided. |
| Time-to-solution | High time-to-solution as it requires much expertise to integrate the model for a specific use case | Low time-to-solution due to the integrated AI offerings already tailored for the specific enterprise use case |
| Data provenance | Lack of transparency into the data used to train the models makes it difficult for enterprises to use in applications | Rigorous checks on source data ensure higher compliance satisfaction |

For enterprise applications demanding the best security and transparency, the most suitable choice is often a pre-trained proprietary Large Language Model (LLM). These models can be accessed through various APIs, integrated into partner cloud networks, or directly deployed on-premises. Opting for these models facilitates swift implementation and offers advanced capabilities, including those offered by HealthMatriX, to meet a wide range of enterprise needs.
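One simple way to assess several criteria at once is a weighted score. The sketch below assumes you rate each option from 1 (poor) to 5 (excellent) on the criteria from the comparison table. The weights and ratings shown are placeholders to be replaced with your own assessment; they are not measurements, and the function name `weighted_score` is our own.

```python
from typing import Mapping

# Placeholder weights: how much each criterion matters to YOUR organization.
CRITERIA_WEIGHTS = {
    "cost": 0.2,
    "support_and_updates": 0.2,
    "time_to_solution": 0.3,
    "data_provenance": 0.3,
}


def weighted_score(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted average of per-criterion scores; weights need not sum to 1."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


# Placeholder ratings for two candidate options (fill in after your own review).
candidates = {
    "candidate A": {"cost": 3, "support_and_updates": 3, "time_to_solution": 3, "data_provenance": 3},
    "candidate B": {"cost": 3, "support_and_updates": 3, "time_to_solution": 3, "data_provenance": 3},
}

for name, scores in candidates.items():
    print(name, round(weighted_score(scores, CRITERIA_WEIGHTS), 2))
```

A score like this does not make the decision for you, but it forces the team to state explicitly which criteria matter most.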

Scaling The AI Model

Once your proof-of-concept is successful, start scaling your chosen AI model to your entire business area. Transitioning from Proof of Concepts (POCs) to full production requires a comprehensive understanding of how scaling AI applications impacts costs, performance, and Return on Investment (ROI). Beyond considering model type, size, and sourcing options, evaluating supporting infrastructure and model-serving capabilities is crucial. This approach is essential for aligning strategies with your objectives and achieving initial goals.

Begin by examining data residency requirements to determine whether a multi-tenant, hosted, API-based solution or a more secure, isolated solution is preferable based on your needs.

Estimate the volume and traffic you intend to handle, considering factors like rate limits for hosted APIs that may impact user experience. Identify the required skills, from prompt engineering to model training, and ensure the availability of these skills in-house through upskilling or recruitment.
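A quick estimate can show whether expected traffic fits within a hosted API's rate limit. The sketch below is a simplified model under stated assumptions: traffic is described by daily users, requests per user, and the share of daily requests that falls in the busiest hour. All numbers, the 80% headroom default, and the function names are illustrative; check your provider's documentation for its actual limits.

```python
def peak_requests_per_minute(
    daily_users: int,
    requests_per_user_per_day: float,
    peak_hour_share: float,
) -> float:
    """Average requests per minute during the busiest hour of the day."""
    daily_requests = daily_users * requests_per_user_per_day
    peak_hour_requests = daily_requests * peak_hour_share
    return peak_hour_requests / 60


def fits_rate_limit(peak_rpm: float, rate_limit_rpm: float, headroom: float = 0.8) -> bool:
    """True if peak traffic stays within a safety fraction of the rate limit."""
    return peak_rpm <= rate_limit_rpm * headroom


# Illustrative numbers only: 2,000 users, 10 requests each, 20% of traffic in the peak hour.
peak = peak_requests_per_minute(2000, 10, 0.2)
print(f"Estimated peak: {peak:.1f} requests per minute")
print("Fits a 100 requests/minute limit:", fits_rate_limit(peak, rate_limit_rpm=100))
```

If the estimate does not fit, the options include requesting a higher limit, queuing requests, or moving to a dedicated deployment.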

Consider the costs and benefits of different solutions, factoring in elements like adapting pre-trained models or utilizing retrieval-augmented generation for dynamic data. Assess your operational capability and evaluate whether to run AI deployments in-house or use externally managed services, considering platforms like AWS Bedrock or HealthMatriX.
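For readers unfamiliar with retrieval-augmented generation, the idea is to look up relevant information at request time and pass it to the model together with the question. The toy sketch below illustrates only the retrieval and prompt-building steps, using simple word overlap for ranking. Production systems typically use embedding-based search, and the documents and function names here are hypothetical.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, document: str) -> int:
    """Count how many query words also appear in the document."""
    return sum((Counter(tokenize(query)) & Counter(tokenize(document))).values())


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k documents with the highest word overlap."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, documents: List[str], top_k: int = 1) -> str:
    """Combine the retrieved context and the question into one prompt string."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical internal documents that change over time.
documents = [
    "Clinic opening hours are 9am to 5pm on weekdays.",
    "Lab results are released to the patient portal within two days.",
]

print(build_prompt("When are lab results released?", documents))
```

The resulting prompt would then be sent to whichever model you have chosen. Because the documents are fetched at request time, the model can work with current data without being retrained.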

In short, while selecting the right AI model, don’t rush, but invest time in understanding the cost, performance, and risks to confidently derive significant value from your AI application.

About HealthMatriX Technologies Limited

HealthMatriX Technologies Limited provides AI-enabled anti-counterfeit QR codes, NFC/UHF tags, and cutting-edge technology development solutions to protect the products and documents for the healthcare and life science sector. HealthMatriX also provides tamper-evident physical products, highly advanced AI-automated software integrations, and white-label solutions. Our cybersecure, data-protected, standardized, and innovative solutions help safeguard healthcare brands, products, solutions, and documents from unauthorized reproduction and duplication.

Read the third blog post for HealthMatriX’s AI Learning and Awareness series here.

About Us

Contact Info

HealthMatriX Technologies Limited

Unit 8, 18/F, Workingfield Commercial Building, 408-412 Jaffe Road, Wanchai, Hong Kong

+852 2523 9959

HealthMatriX Technologies Pte. Ltd.

192, Waterloo Street, #05-01 Skyline Building Singapore 187966

© 2022 HealthMatriX Technologies Pte. Ltd. All rights reserved.
